The US luxury automobile brand Cadillac is one of the oldest and most recognizable vehicle marques in the world. It was founded by the machinist, engineer, and entrepreneur Henry Leland.

Henry Leland’s enterprise

The history of Cadillac began in 1902, when the co-owners of the Henry Ford Company hired Henry Leland to appraise their factory prior to liquidation. After completing the evaluation, however, he advised them to reorganize the business and build a new car that he had designed. The reorganized company was named Cadillac, after Antoine de la Mothe Cadillac, the French explorer who founded Detroit in 1701; his coat of arms served as the basis for the company’s crest.

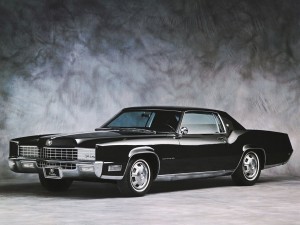

Cadillac Eldorado 1967

Leland’s vehicle business succeeded thanks to its use of interchangeable parts, which became a key principle of modern automotive mass production. In 1908 Cadillac became the first American manufacturer to win the Dewar Trophy of the Royal Automobile Club.

GMC takes over

Since 1909 the history of Cadillac has been tied to General Motors Company (GMC). Leland sold his business to GMC for $4.5 million and remained an executive of the company for another 8 years. During this time Cadillac won its second Dewar Trophy, in 1913, for incorporating electric engine starting. Cadillac also introduced technological advances such as the clashless transmission, a full electrical system, and the V8 engine.